Dec 18, 2025

Virtual production is powerful; however, it can also be unforgiving. The moment the volume powers up or the stage is locked, the clock is ticking, and every minute costs money.

Environments need to be ready, assets need to behave, cameras need to hit their marks, and performance, lighting and playback all have to line up. If anything slips, you're burning budget just to stand still.

The reality for most teams is that there's never quite enough time to:

Previs complex scenes properly before you're on stage.

Turn quick concept art into navigable, testable shots.

Capture performance data without a full mocap footprint.

Build, scan and update environments at the speed of the schedule.

Fix lighting and continuity issues once the set has been struck.

That's where a new generation of AI-native tools comes in. They compress the slowest and most fragile parts of the workflow: planning, asset prep, performance capture, worldbuilding, and final relighting. They help you get to "good enough to shoot" faster, and keep your options open when the schedule inevitably changes.

From idea to stage: smarter planning and virtual cameras

Most problems in virtual production start long before anyone stands on a volume. If you can plan better, scout smarter and test coverage early, everything downstream gets easier.

Lightcraft Jetset is a good example of that shift. Running on a phone or tablet, it turns your device into a real-time VP tool for stage planning and virtual camera operation. You can walk through a space, frame shots, and see your live camera feed composited into 3D environments as you move. That means:

Directors and DPs can explore blocking and lens choices during tech scouts, not just once they're on an LED stage.

VP supervisors can test whether a shot is even practical before committing time, crew and budget.

It's essentially a lightweight rehearsal version of your stage, in your pocket.

Moonvalley takes the same idea of fast camera exploration and applies it to 2D source material. Its Marey model turns single images or simple scenes into navigable 3D spaces. Instead of staring at a storyboard and guessing how a dolly or crane move might feel, you can:

Fly a virtual camera through a still

Design precise paths and timing

Share those moves with the VP team as a clear reference

That closes the gap between concept and volume. The creative team can work in the language they already use (frames, mood boards, concept art) and still deliver something spatially meaningful to the VP crew.

To keep all of this aligned, Intangible adds a browser-based spatial layer. Think of it as a shared sandbox where you can block out scenes, drop in assets, sketch camera paths and iterate visually with clients and collaborators. Generative tools sit on top of that spatial understanding, helping you experiment with lighting and layout, without needing to be a full-time 3D artist. For virtual production, that means everyone is looking at the same scene, not just imagining it from different decks.

Performance and characters: from mocap to digital humans

Once the world and cameras are blocked, you need believable people moving through it. That’s another area where AI is compressing timelines.

Movin3D focuses on motion and spatial understanding using a single LiDAR-enabled device. No suits, no big mocap volume: you can capture full-body performance in minutes. For VP teams, that’s ideal for:

Blocking stunts and action beats in rehearsal

Generating quick previs for complicated scenes

Testing whether a performance reads with the planned camera before scheduling full-scale capture

It turns motion capture into something you can do on more stages, more often.

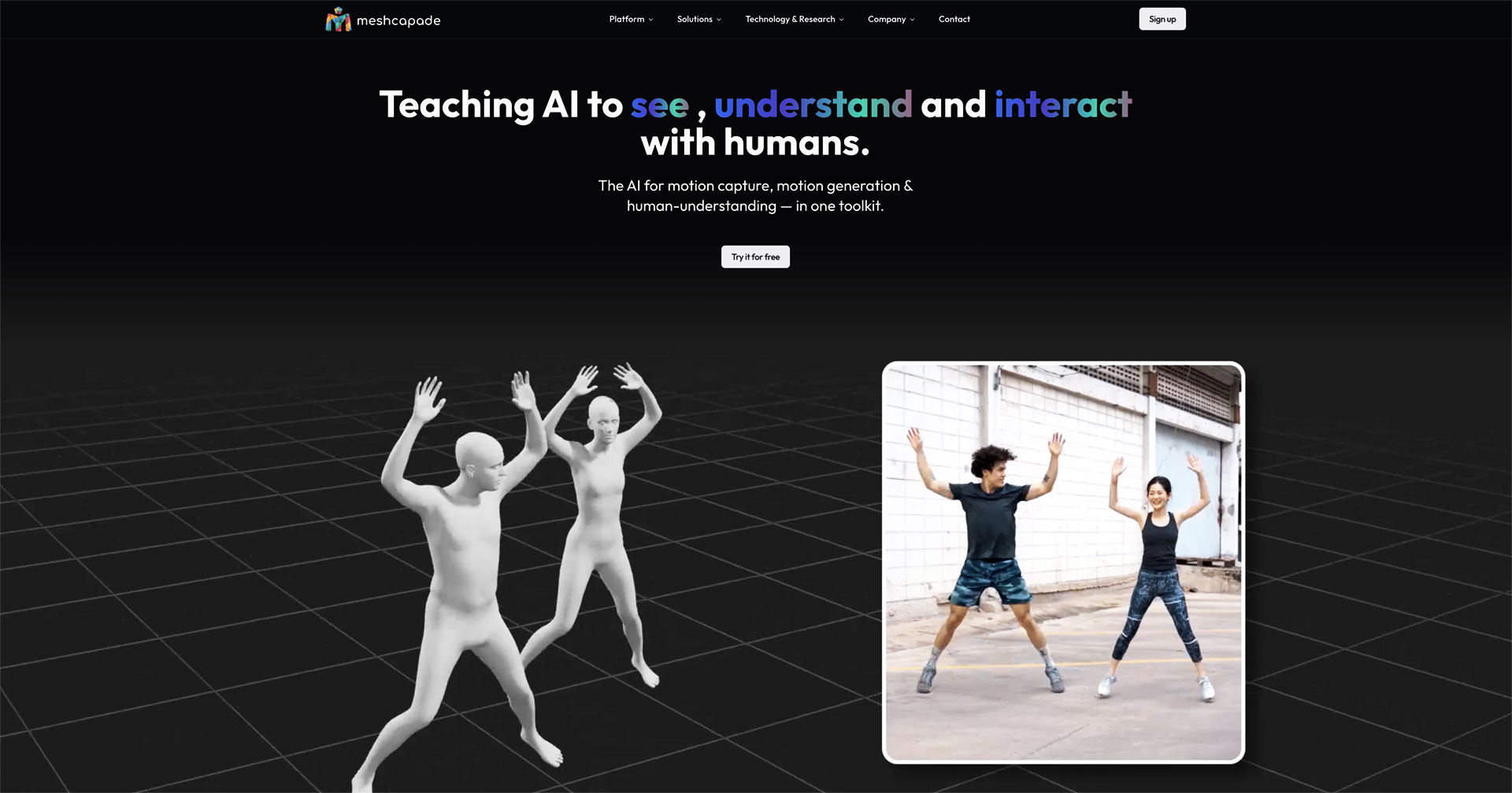

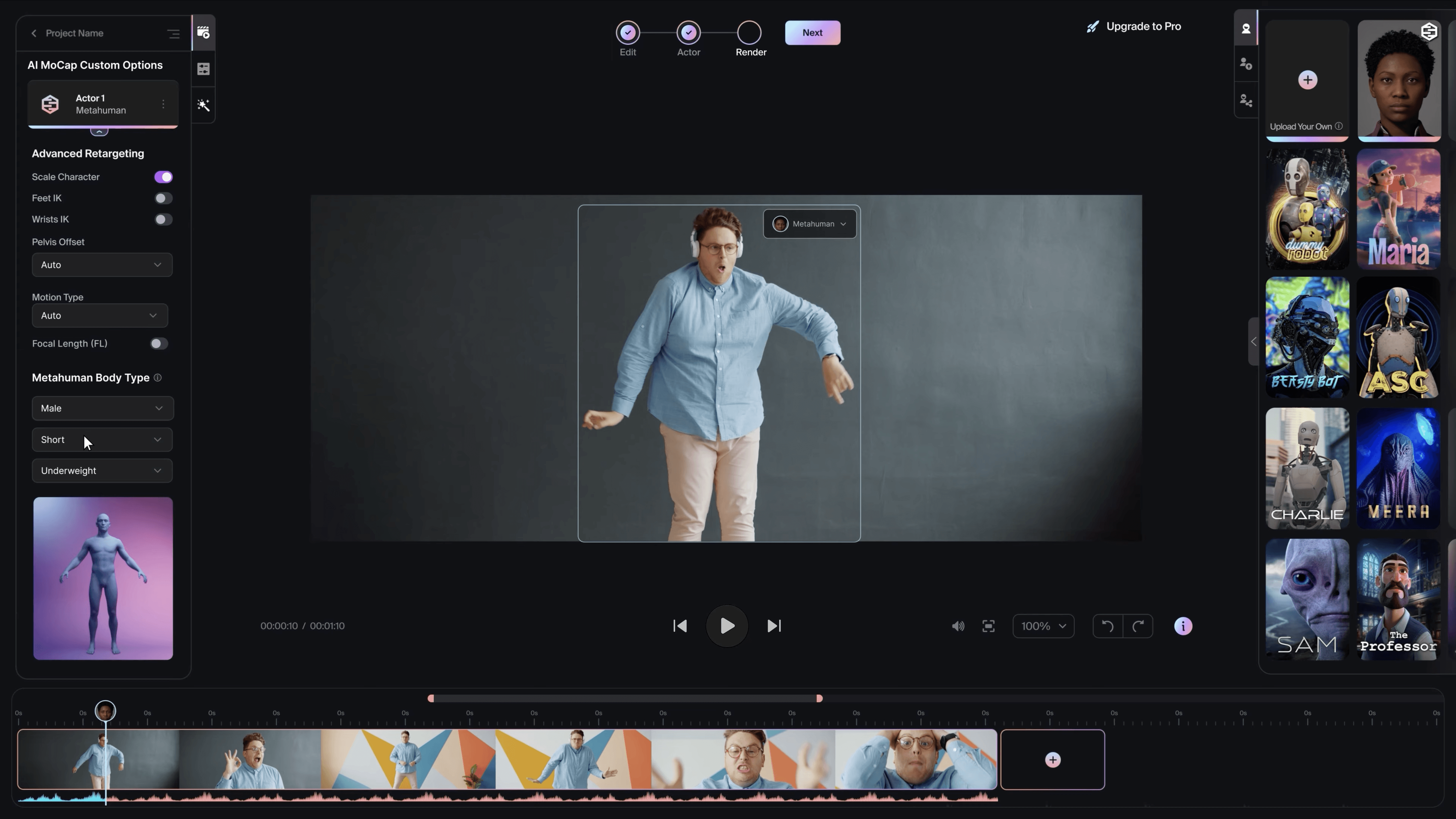

On the character side, Meshcapade gives you realistic digital humans on tap. Feed it photos, scans or other inputs, and it generates rigged 3D characters that behave consistently across shots and projects. Once those avatars exist, they can become:

Digital doubles for tricky setups or safety-critical stunts

Background actors for crowd extension

Consistent stand-ins for lighting, reflections and techvis

Layered on top of that, Wonder Dynamics (now part of Autodesk as Flow Studio) automates much of the grunt work in putting CG characters into live-action plates. It tracks actors, infers performance data and helps align animation and lighting to the plate. In practice, that lets you:

Turn rehearsal or test footage into working character shots

Validate creature and character beats early in the process

Save full hero animation passes for the shots that really need them

Together, these tools turn performance and character work into something you can iterate on constantly, instead of a one-shot pipeline that's too expensive to change.

Worlds, scans and compute: building the backbone

Virtual production environments need to be accurate, flexible and performant, especially when they’re based on real locations.

World Labs sits on the generative side of that backbone. Marble, its world model, turns text prompts, reference images, video clips or rough 3D layouts into spatially consistent 3D worlds you can move through and edit. In practice, lookbooks and location photos can become explorable sets rather than just reference decks, with outputs like Gaussian splats and meshes that drop into real-time engines and offline renders. For VP teams, it shortens the distance between concept art and shoot-ready environments, especially for smaller stages and hybrid LED/green-screen workflows.

Xgrids anchors those worlds in reality. Its Lixel handheld LiDAR scanners and pipelines are designed for fast, precise reality capture, turning physical spaces into high-quality digital twins in a short time. Those scans feed straight into 3D workflows, giving VP teams:

Clean, consistent world geometry for previs, techvis and final environments

A single source of truth for sets, streets and interiors

A way to keep location-based VP aligned across multiple shoots and seasons

On the compute side, Xgrids is also leaning into formats and infrastructure for modern 3D representation, like 3D Gaussian Splatting. The upshot is that heavy, volumetric scenes can be processed and served at scale without you having to reinvent the infrastructure.

Paired with Intangible, Moonvalley and Jetset, you end up with a pipeline where ideas become navigable worlds quickly, and those worlds stay accurate all the way to final.

Beeble: Treating real footage like CG

All of that gets you to the same place: a shot with real performances, virtual or physical environments, and a director who still wants options.

This is where Beeble comes in.

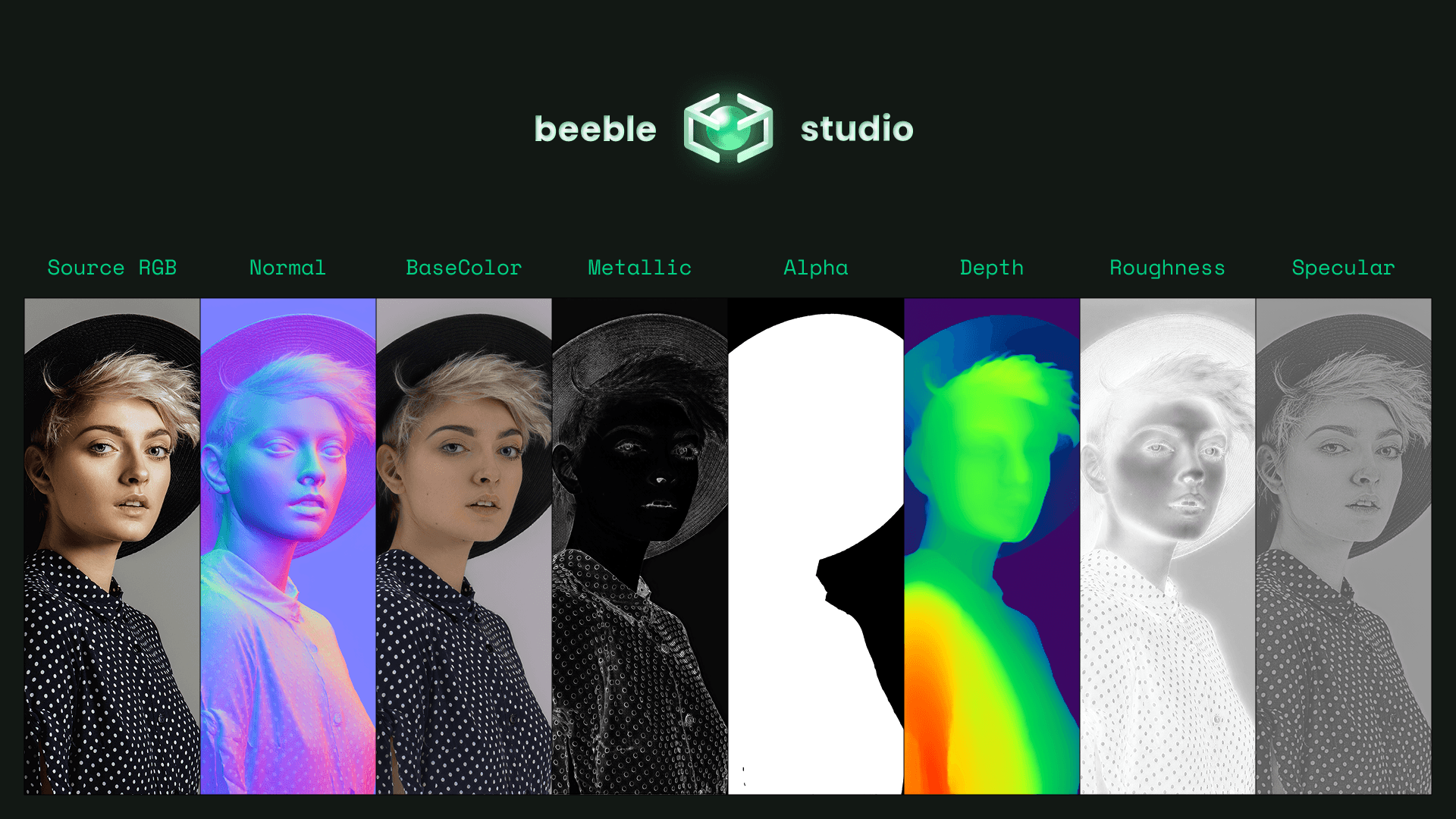

Beeble takes live-action footage and generates full physically based rendering (PBR) passes: normals, depth, albedo, roughness, metallic and more. In other words, it reconstructs enough of the scene's geometry and material response that you can relight it like CG.

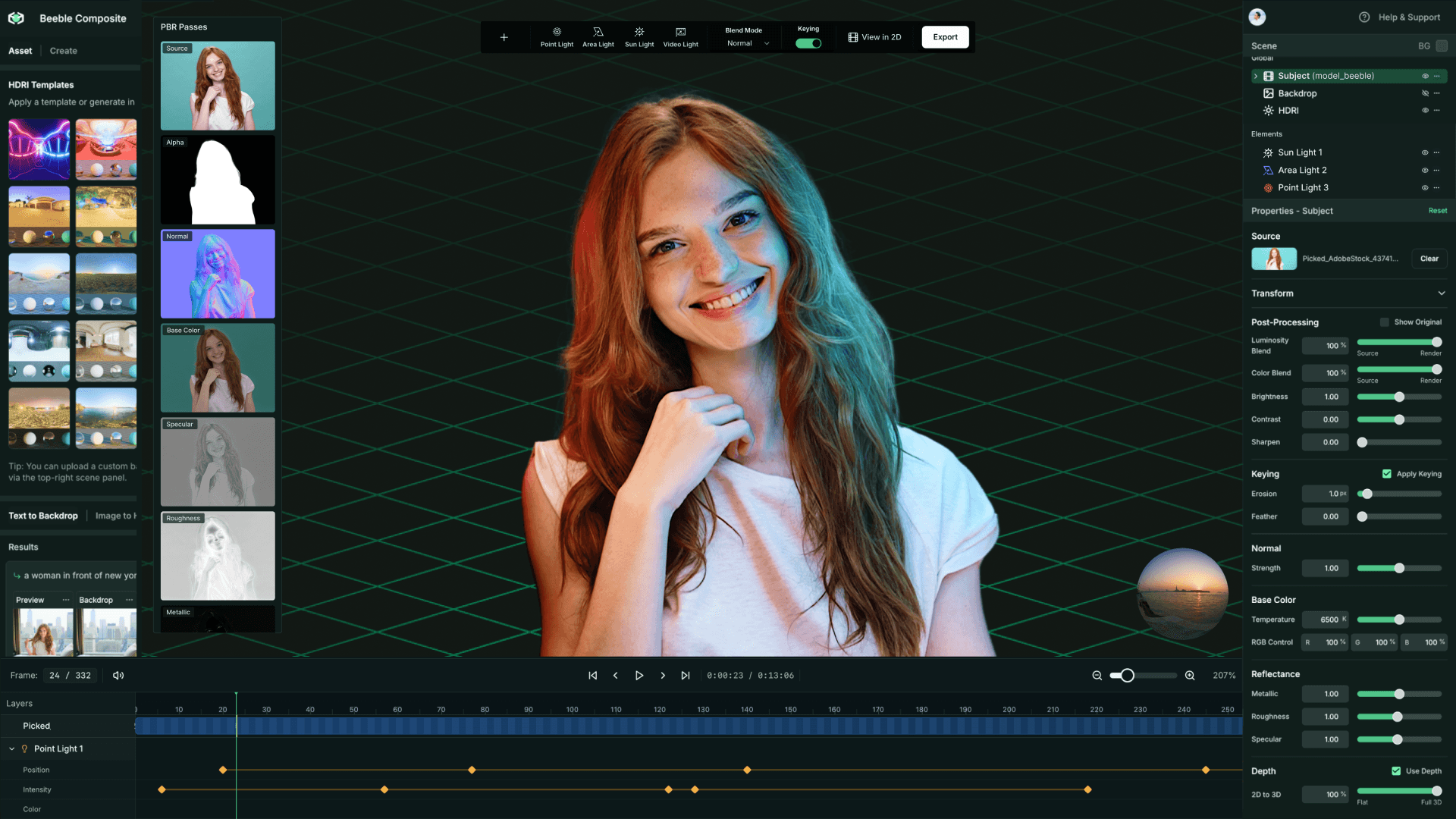
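To make "relight it like CG" concrete, here is a minimal sketch of how a compositor might use two of those passes, albedo and normals, to apply a new light to a plate. It is an illustration under simplifying assumptions, not Beeble's method or API: the function names (`normalize`, `relight_diffuse`) and the tiny two-pixel "image" are hypothetical, and the shading is plain Lambertian diffuse, ignoring roughness, metallic, depth and shadows.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def relight_diffuse(albedo, normals, light_dir,
                    light_color=(1.0, 1.0, 1.0), ambient=0.05):
    """Hypothetical helper: per-pixel Lambertian relight.

    albedo  : rows of (r, g, b) base colours in the range 0..1
    normals : rows of (x, y, z) surface normals, same layout as albedo
    Output pixel = albedo * (ambient + light_color * max(0, n . l)),
    clamped to 1.0.
    """
    l = normalize(light_dir)
    relit = []
    for albedo_row, normal_row in zip(albedo, normals):
        row = []
        for a, n in zip(albedo_row, normal_row):
            n = normalize(n)
            # How directly this surface faces the light (0 when facing away).
            n_dot_l = max(0.0, sum(nc * lc for nc, lc in zip(n, l)))
            row.append(tuple(
                min(1.0, ac * (ambient + lc * n_dot_l))
                for ac, lc in zip(a, light_color)
            ))
        relit.append(row)
    return relit

# Illustrative 1x2 "plate": same skin-like albedo, two surface orientations.
albedo = [[(0.8, 0.6, 0.4), (0.8, 0.6, 0.4)]]
normals = [[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]]  # facing camera, facing right

front = relight_diffuse(albedo, normals, light_dir=(0.0, 0.0, 1.0))
side = relight_diffuse(albedo, normals, light_dir=(1.0, 0.0, 0.0))
```

Moving the light from the front to the side brightens the right-facing pixel and drops the camera-facing one to the ambient level, without touching the original footage. A production relight would add the remaining passes (roughness and metallic for specular response, depth for positional lights), but the principle is the same.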

In a virtual production context, that changes what "locked" really means:

If volume content and plate don't quite match, you can rebalance the lighting in post instead of booking another day on the stage.

If a director wants to try a mood shift later in the day (more contrast, different ambience), you can explore that look interactively.

If you're blending location photography with LED environments, you can use those PBR passes to create more physically consistent composites.

With Beeble Studio, all of this happens locally on your own GPUs, in true 4K, with unlimited processing and on-prem security. That matters when you're dealing with unreleased shows, high-profile talent or confidential sets: the footage never leaves your facility, but you still get the speed and flexibility of AI-driven relighting.

In short, Beeble is the bridge between virtual production and traditional post, letting you treat captured footage with the same freedom you expect from CG renders.

Putting it together: an AI-native VP stack

None of these tools replaces a director, a DP, a VFX supervisor or a stage crew. What they do, collectively, is compress the distance between intention and image.

A modern AI-assisted VP stack might look like this:

Plan and explore with Intangible, Moonvalley and Lightcraft Jetset.

Capture and perform with Movin3D, Meshcapade and Wonder Dynamics.

Build and ground worlds with World Labs' generative environments, plus Xgrids' scans and scalable 3D infrastructure.

Finish and extend with Beeble, relighting and refining footage as if it were CG.

The teams with an edge in virtual production won't be the ones ignoring these tools; they'll be the ones squeezing real value out of them, using AI to take the friction out of planning, prep and fixes when the clock is ticking.

If you're already experimenting with virtual production and want more control over how you light and relight your worlds, Beeble is built to slot straight into that toolkit. Treat real footage like CG, keep your data where it belongs, and use AI to move faster when it matters most.