Jan 15, 2026


When London-based electronic duo Red Mercury started work on their I Still Remain music video, they didn't have a studio, a VFX team, or a budget – just a DSLR, a £20 blue sheet, and a one-bedroom flat.

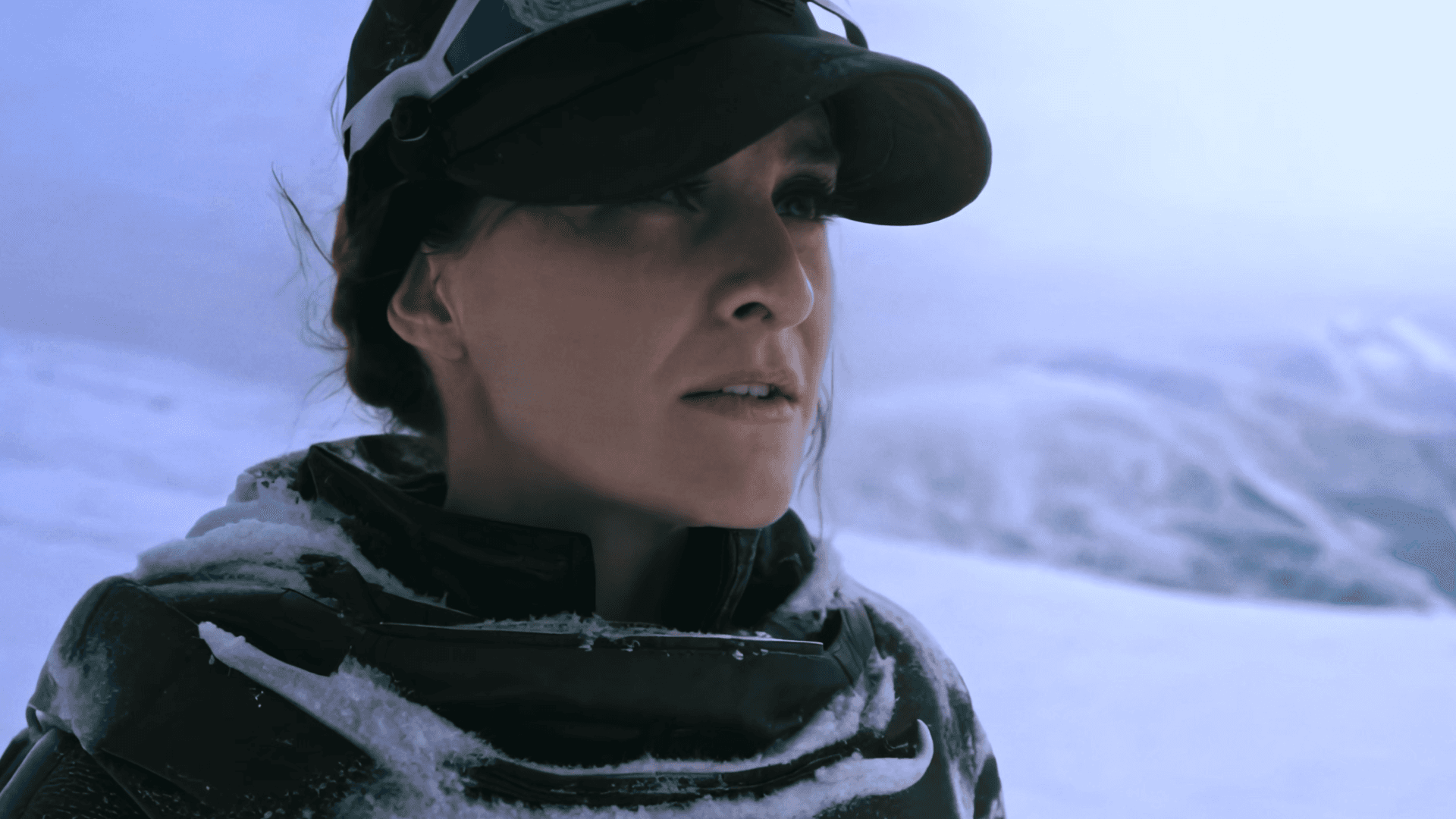

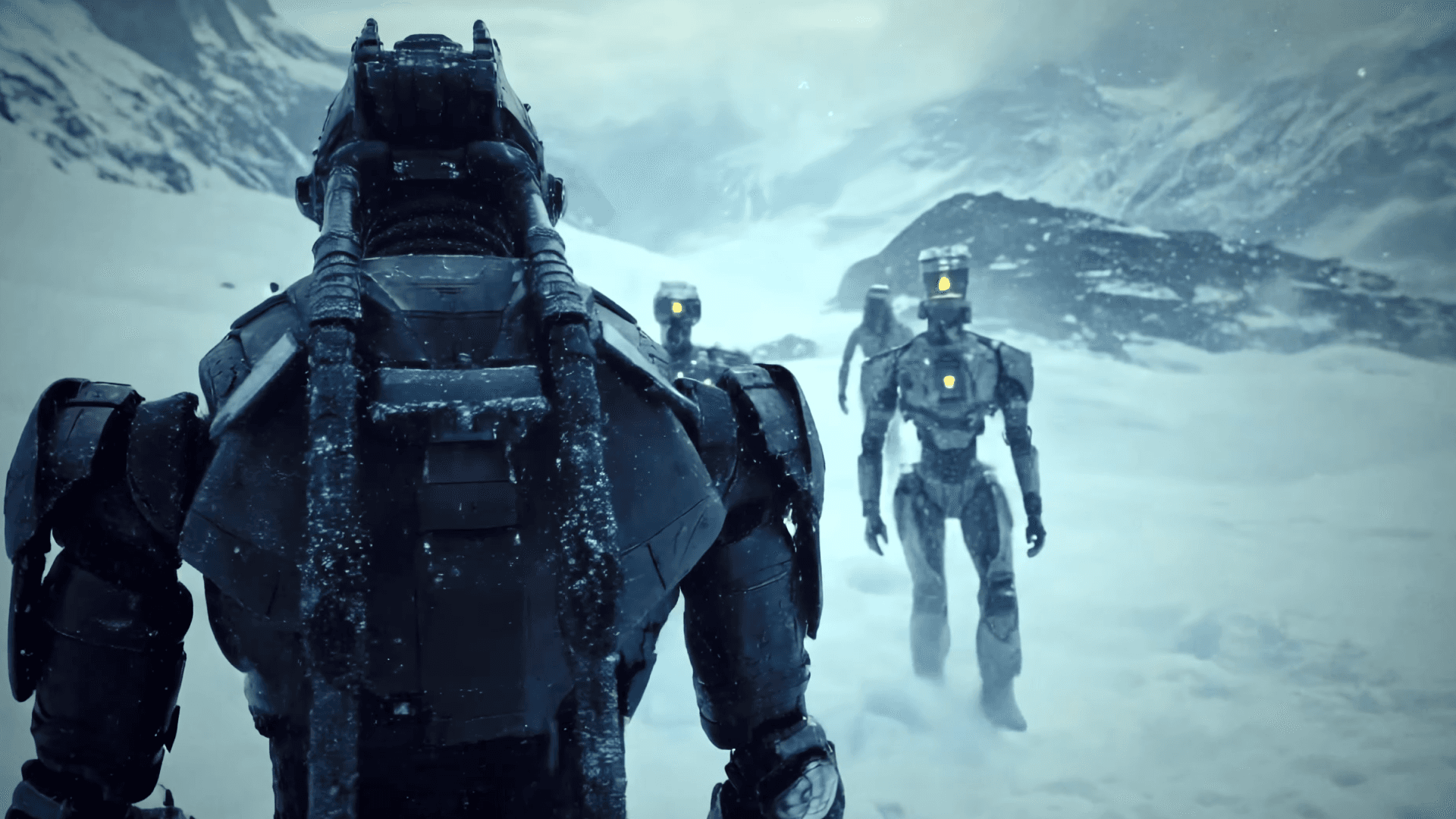

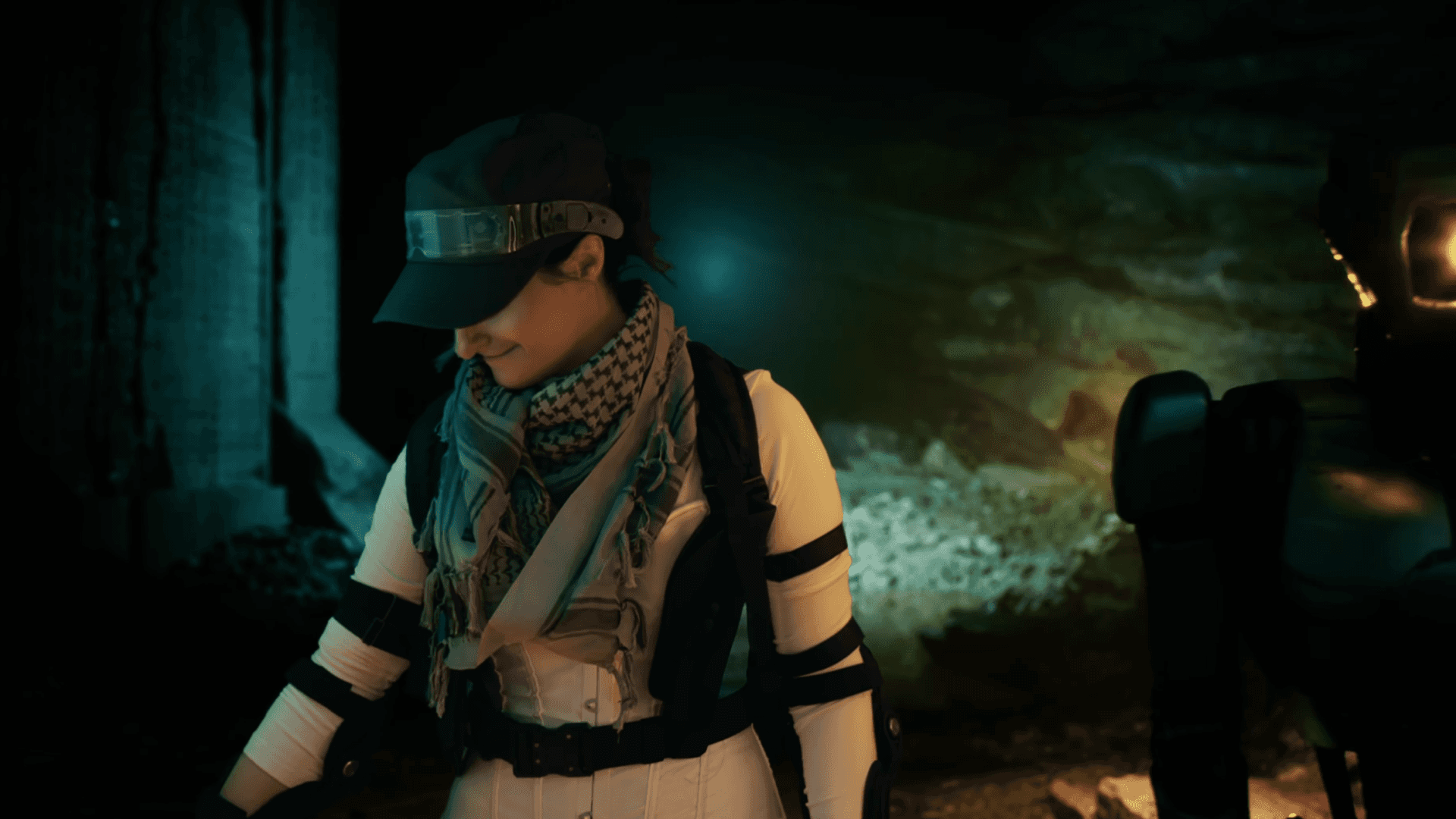

Nine months later, they'd crafted a cinematic sci-fi short about cloning, identity, and survival in a hostile city, filled with Unreal Engine environments, gunfire lighting, swarms of creatures, and a grounded central performance. The key was using AI not to generate content, but to handle the behind-the-scenes VFX work that usually eats up time and resources.

Underpinning that was a streamlined, flexible pipeline. Performances were shot simply, isolated cleanly, and then shaped in post, with lighting treated as something that could be designed after the fact, not locked in on set. Rather than forcing live-action footage to conform to 3D scenes inside Unreal, the team found greater control by combining Unreal renders with relit performances directly in Beeble's web editor, achieving cleaner edges, more consistent lighting, and faster iteration. That shift turned Beeble into the backbone of the production, handling rotoscoping and video relighting so a solo creator could work at a level normally reserved for full post houses.

Producer Steve Gregory and vocalist and songwriter Isobel Crawford shared with us how the project came to fruition.

Behind the scenes

Can you describe Red Mercury?

Isobel Crawford: We're an electronic band. We specialise in techno and drum and bass. Steve and I met in 2020, which is when we started Red Mercury together, and now we're husband and wife. I have a musical background and bring vocals and songwriting, combined with Steve's production.

During lockdown, when we couldn't film outside, we started exploring virtual production options, and over the last three or four years, our videos have been more focused on VFX and virtual production.

The project

Your latest release is also a short film. How would you describe I Still Remain – musically and visually?

Isobel: When we start a project, we discuss what the theme might be, the message. For this one, we were looking at ideas like identity, cloning, not losing yourself in a busy environment, and tying them to adversity, resilience, rebuilding, and being a phoenix from the fire. And that complemented the video. I'm the main character, and it goes from me doing my own thing to suddenly being more “badass.”

What was the creative process like – planning, shooting, timeline?

Steve Gregory: This one worked a bit back-to-front. We actually wrote the music after we'd done the video; I was working to temp music for most of that time. The video took nine months to make, working on it every night and on weekends.

The first three months were spent trying lots of different tools to figure out what was even possible. I must have tested 30 different pieces of software, including building my own, to build a pipeline that reliably generated shots.

We did three or four separate shoots, and it was literally us in a one-bedroom flat with wide lenses because I couldn't stand back far enough. And I even fit a 12-foot crane into the flat.

What were the hardest parts of making live-action scenes inside Unreal environments?

Steve: Beeble actually enabled us to do this project. I don't think we would have been able to produce it otherwise. I didn't want to commit unless I knew it was technically possible; I was really concerned it would look stuck-on and cheap, because that's often what happens when you try to integrate a real person into CGI: the lighting's wrong, the edges around it don't look right.

Rotoscoping is amazingly complex and has been largely manual for years. Relighting is also almost impossible to do well when you shoot under a single light source and have to match the footage to a different one.

For me, Beeble became a huge time-saver and an essential tool for combining live-action footage with 3D. It was critical to what we were doing.

Beeble in production

Can you walk us through a typical shot – where exactly does Beeble slot in?

Steve: For I Still Remain, the pipeline was deliberately simple. We shot on a DSLR against a blue screen in our flat, sometimes with an iPhone mounted above the camera for tracking reference. The footage then went straight into Beeble's web editor, which handled the rotoscoping and keying, and let us relight Izi to sit naturally inside the Unreal environments – from overall mood and direction to interactive details like gunfire flashes.

We brought those Beeble passes into Unreal Engine to build and animate the wider world, occasionally sending renders back through Beeble for final lighting tweaks.

As we grew more confident in Beeble's ability to remove backgrounds and relight footage, we even began dropping the blue screen for some setups, focusing on performance and camera movement, knowing we could refine the lighting in post.

There were three kinds of shots: simple shots with no movement, more complex shots in which the camera moves around the subject, and faked moving shots. The moving shots are more difficult because if the background doesn't track, you get sliding, and it doesn't look real.

Beeble didn't support camera tracking at the time, so finding a camera-tracking solution that would integrate was a challenge. I tried Lightcraft, and in the end, I used Wonder Dynamics and simplified shots.

At a high level, the pipeline was: shoot on blue screen, bring the footage into Beeble, and rotoscope it to a transparent background. From there, you can either relight and export into Unreal or, as I ended up doing, render in Unreal and combine those renders with the Beeble footage, because I found the Beeble web editor produced much better results than taking the footage into Unreal.
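For readers curious what that final combine step amounts to, here is a minimal sketch of the standard alpha "over" operation, which places a rotoscoped foreground with a transparent background on top of a rendered background. It is an illustration only, not Beeble's or Unreal's implementation. The names `over` and `composite` are hypothetical, and it assumes straight (non-premultiplied) alpha with channel values between 0 and 1.

```python
from typing import List, Tuple

# Assumed pixel layout for this sketch: straight (non-premultiplied) alpha, values in 0..1.
RGBA = Tuple[float, float, float, float]
RGB = Tuple[float, float, float]


def over(fg: RGBA, bg: RGB) -> RGB:
    """Composite one foreground pixel over an opaque background pixel."""
    r, g, b, a = fg
    # Each channel is a weighted mix: alpha picks the foreground, (1 - alpha) the background.
    return (
        r * a + bg[0] * (1.0 - a),
        g * a + bg[1] * (1.0 - a),
        b * a + bg[2] * (1.0 - a),
    )


def composite(fg_frame: List[List[RGBA]], bg_frame: List[List[RGB]]) -> List[List[RGB]]:
    """Composite a whole frame (rows of pixels) over a background of the same size."""
    same_rows = len(fg_frame) == len(bg_frame)
    if not same_rows or any(len(f) != len(b) for f, b in zip(fg_frame, bg_frame)):
        raise ValueError("frames must have the same dimensions")
    return [
        [over(f, b) for f, b in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(fg_frame, bg_frame)
    ]
```

A fully opaque foreground pixel replaces the background, a fully transparent one leaves it untouched, and the partially transparent pixels along a subject's edges blend the two. That blend is why the quality of the rotoscoped edge matters so much to whether a performer looks "stuck on".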

Is there a sequence where Beeble made the biggest difference?

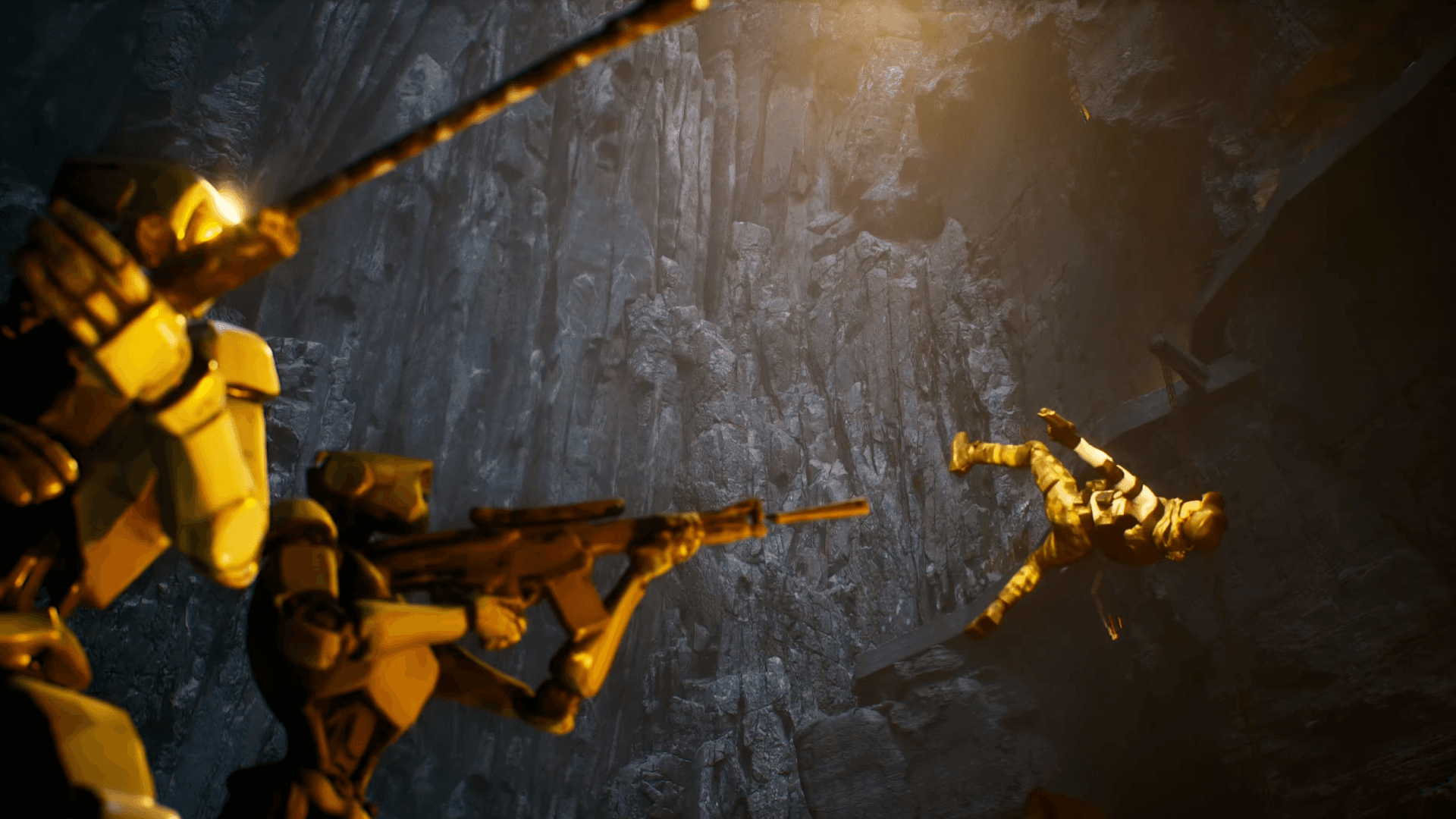

Steve: Shots on the bridge: gunfire going off in Unreal, lights flashing, and the footage being relit with those gun flashes. There's no way I could have done that with anything else.

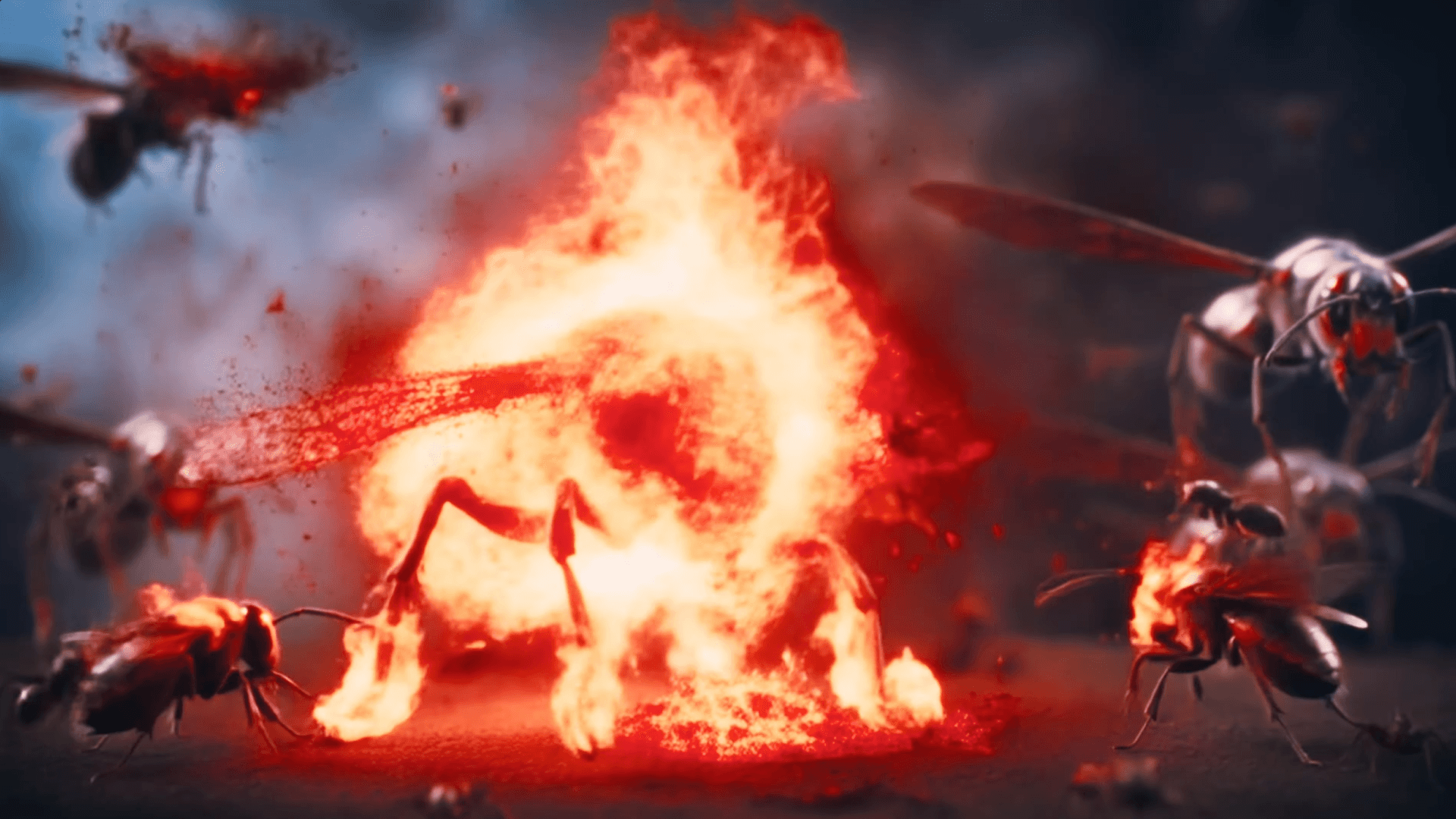

Also, the flying bees: originally, I was going to animate them in 3D, but time-wise, it was too much. I used Kling to generate some animation, then used Beeble to remove the background and relight, and I flew the video through it. It turned what would have been a complex 3D shot into something I could produce very quickly, and it looked perfect.

What would you say to other artists who want big sci-fi visuals on small-team resources?

Steve: Get started. I Still Remain was made with no budget, a £20 blue sheet, a DSLR camera, and a lot of evenings and weekends. The barrier to entry has never been lower – the main cost is your commitment.

The hardest part isn't gear, it's finding software you can trust. For us, Beeble became one of those tools: a huge time-saver and a crucial component in transforming our footage into something that looks far bigger than the room it was shot in.
